|

2024-10-25 |

|

Table of Contents

- Preface

- 1. SqlTool

-

- Try It

- Purpose, Coverage, Recent Changes in Behavior

- The Bare Minimum

- Quotes and Spaces

- Embedding

- Non-displayable Types

- Compound commands or commands with semi-colons

- Desktop shortcuts

- Loading sample data

- Satisfying SqlTool's CLASSPATH Requirements

- RC File Authentication Setup

- Switching Data Sources

- Using Inline RC Authentication

- Logging

- Interactive Usage

- Command Types

- Special Commands

- Edit Buffer / History Commands

- PL Commands

- Non-Interactive

- Generating Text or HTML Reports

- Storing and Retrieving Binary Files

- SqlTool Procedural Language

- Chunking

- Raw Mode

- SQL/PSM, SQL/JRT, and PL/SQL

- Delimiter-Separated-Value Imports and Exports

- CSV Imports and Exports

- Unit Testing SqlTool

- 2. Hsqldb Test Utility

- 3. Database Manager

- 4. Transfer Tool

- A. SqlTool System PL Variables

- B. HyperSQL File Links

List of Tables

List of Examples

- 1.1. Sample RC File

- 1.2. Default parameter value with optional user-specified override

- 1.3. Piping input into SqlTool

- 1.4. Redirecting input into SqlTool

- 1.5. Error-handling Idiom

- 1.6. Sample HTML Report Generation Script

- 1.7. Inserting binary data into database from a file

- 1.8. Downloading binary data from database to a file

- 1.9. Example to avoid accidental Comment

- 1.10. Explicit null and empty-string Tests

- 1.11. Special values for ?, and _ (or ~) Variables

- 1.12. Creating a SqlTool Function

- 1.13. Invoking a SqlTool Function

- 1.14. Simple SQL file using PL

- 1.15. Inline If Statement

- 1.16. SQL File showing use of most PL features

- 1.17. Interactive Raw Mode example

- 1.18. PL/SQL Example

- 1.19. SQL/JRT Example

- 1.20. SQL/PSM Example

- 1.21. DSV Export Example

- 1.22. DSV Import Example

- 1.23. DSV Export of an Arbitrary Query

- 1.24. Sample DSV headerswitch settings

- 1.25. DSV targettable setting

- 1.26. Sample CSV export + import script

Table of Contents

If you notice any mistakes in this document, please email the author listed at the beginning of the chapter. If you have problems with the procedures themselves, please use the HSQLDB support facilities which are listed at http://hsqldb.org/support.

This document is available in several formats.

You may be reading this document right now at http://hsqldb.org/doc/2.0, or in a distribution somewhere else. I hereby call the document distribution from which you are reading this, your current distro.

http://hsqldb.org/doc/2.0 hosts the latest production versions of all available formats. If you want a different format of the same version of the document you are reading now, then you should try your current distro. If you want the latest production version, you should try http://hsqldb.org/doc/2.0.

Sometimes, distributions other than http://hsqldb.org/doc/2.0 do not host all available formats. So, if you can't access the format that you want in your current distro, you have no choice but to use the newest production version at http://hsqldb.org/doc/2.0.

Table 1. Available formats of this document

| format | your distro | at http://hsqldb.org/doc/2.0 |

|---|---|---|

| Chunked HTML | index.html | http://hsqldb.org/doc/2.0/util-guide/ |

| All-in-one HTML | util-guide.html | http://hsqldb.org/doc/2.0/util-guide/util-guide.html |

| util-guide.pdf | http://hsqldb.org/doc/2.0/util-guide/util-guide.pdf |

If you are reading this document now with a standalone PDF reader,

your distro links may not work.

Table of Contents

- Try It

- Purpose, Coverage, Recent Changes in Behavior

- The Bare Minimum

- Quotes and Spaces

- Embedding

- Non-displayable Types

- Compound commands or commands with semi-colons

- Desktop shortcuts

- Loading sample data

- Satisfying SqlTool's CLASSPATH Requirements

- RC File Authentication Setup

- Switching Data Sources

- Using Inline RC Authentication

- Logging

- Interactive Usage

- Command Types

- Special Commands

- Edit Buffer / History Commands

- PL Commands

- Non-Interactive

- Generating Text or HTML Reports

- Storing and Retrieving Binary Files

- SqlTool Procedural Language

- Chunking

- Raw Mode

- SQL/PSM, SQL/JRT, and PL/SQL

- Delimiter-Separated-Value Imports and Exports

- CSV Imports and Exports

- Unit Testing SqlTool

If you know how to type in a Java command at your shell command line,

and you know at least the most basic SQL commands, then you know

enough to benefit from SqlTool.

You can play with Java system properties, PL variables, math, and

other things by just executing

this sqltool-2.7.4.jar file.

But SqlTool was made for JDBC, so you should download HyperSQL to

have SqlTool automatically connect to a fully functional, pure Java

database; or obtain a JDBC driver for any other SQL database that you

have an account in.

If HyperSQL's hsqldb.jar resides in the same

directory as the SqlTool jar file, then you can connect up to a

HyperSQL instance from SqlTool just by specifying the JDBC URL, root

account user name of SA and empty password, with

the \j command.

See the

Switching Data Sources

section below for details about \j.

![[Note]](../images/db/note.png) | Note |

|---|---|

|

Due to many important improvements to SqlTool, both in terms of stability and features, all users of SqlTool are advised to use the latest version of SqlTool, even if your database instances run with an older HSQLDB version. How to do this is documented in the Accessing older HSQLDB Databases with SqlTool section below. |

This document explains how to use SqlTool, the main purpose of

which is to read your SQL text file or stdin, and execute the SQL

commands therein against a JDBC database.

There are also a great number of features to facilitate both

interactive use and automation.

The following paragraphs explain in a general way why SqlTool is

better than any existing tool for text-mode interactive SQL work,

and for automated SQL tasks.

Two important benefits which SqlTool shares with other pure Java

JDBC tools is that users can use a consistent interface and

syntax to interact with a huge variety of databases-- any

database which supports JDBC; plus the tool itself runs on any

Java platform.

Instead of using isql for Sybase,

psql for Postgresql,

Sql*plus for Oracle, etc., you can

use SqlTool for all of them.

As far as I know, SqlTool is the only production-ready, pure

Java, command-line, generic JDBC client.

Several databases come with a command-line client with limited

JDBC abilities (usually designed for use with just their specific

database).

![[Important]](../images/db/important.png) | Use the In-Program Help! |

|---|---|

|

The SqlTool commands and settings are intuitive once you are

familiar with the usage idioms.

This Guide does not attempt to list every SqlTool command and

option available.

When you want to know what SqlTool commands or options are available

for a specific purpose, you need to list the commands of the

appropriate type with the relevant "?" command.

For example, as explained below, to see all Special commands, you

would run |

SqlTool is purposefully not a Gui tool like Toad or DatabaseManager. There are many use cases where a Gui SQL tool would be better. Where automation is involved in any way, you really need a text client to at least test things properly and usually to prototype and try things out. A command-line tool is really better for executing SQL scripts, any form of automation, direct-to-file fetching, and remote client usage. To clarify this last, if you have to do your SQL client work on a work server on the other side of a VPN connection, you will quickly appreciate the speed difference between text data transmission and graphical data transmission, even if using VNC or Remote Console. Another case would be where you are doing some repetitive or very structured work where variables or language features would be useful. Gui proponents may disagree with me, but scripting (of any sort) is more efficient than repetitive copy & pasting with a Gui editor. SqlTool starts up very quickly, and it takes up a tiny fraction of the RAM required to run a comparably complex Gui like Toad.

SqlTool is superior for interactive use because over many years it

has evolved lots of features proven to be efficient for day-to-day

use.

Four concise in-program help commands (\?,

:?, *?

and /?) list all

available commands of the corresponding type.

SqlTool doesn't support up-arrow or other OOB escapes (due to basic

Java I/O limitations), but it more than makes up for this limitation

with macros, user variables, command-line history and recall, and

command-line editing with extended Perl/Java regular expressions.

The \d commands deliver JDBC metadata information as consistently as

possible (in several cases, database-specific work-arounds are used

to obtain the underlying data even though the database doesn't

provide metadata according to the JDBC specs).

Unlike server-side language features, the same feature set works

for any database server.

Database access details may be supplied on the command line, but

day-to-day users will want to centralize JDBC connection details

into a single, protected RC file.

You can put connection details (username, password, URL, and other

optional settings) for scores of target databases into your RC file,

then connect to any of them whenever you want by just giving

SqlTool the ID ("urlid") for that database.

When you Execute SqlTool interactively, it behaves by default

exactly as you would want it to.

If errors occur, you are given specific error messages and you

can decide whether to roll back your session.

You can easily change this behavior to auto-commit,

exit-upon-error, etc., for the current session or for all

interactive invocations.

You can import or export delimiter-separated-value files.

If you need to run a specific statement repeatedly, perhaps changing

the WHERE clause each time, it is very simple to define a macro.

When you Execute SqlTool with a SQL script, it also behaves by

default exactly as you would want it to.

If any error is encountered, the connection will be rolled back,

then SqlTool will exit with an error exit value.

If you wish, you can detect and handle error (or other) conditions

yourself.

For scripts expected to produce errors (like many scripts provided

by database vendors), you can have SqlTool continue-upon-error.

For SQL script-writers, you will have access to portable scripting

features which you've had to live without until now.

You can use variables set on the command line or in your script.

You can handle specific errors based on the output of SQL commands

or of your variables.

You can chain SQL scripts, invoke external programs, dump data

to files, use prepared statements,

Finally, you have a procedural language with if,

foreach, while,

continue, and break statements.

SqlTool runs on any Java 11 or later platform. I know that SqlTool works well with Sun and OpenJDK JVMs. I haven't run other vendors' JVMs in years (IBM, JRockit, etc.). As my use with OpenJDK proves that I don't depend on Sun-specific classes, I expect it to work well with other Java implementations. A version of the jar compiled with JDK 8 is also included.

SqlTool no longer writes any files without being explicitly instructed to. Therefore, it should work fine on read-only systems, and you'll never have orphaned temp files left around.

The command-line examples in this chapter work as given on all

platforms (if you substitute in a normalized path in place of

$HSQLDB_HOME), except where noted otherwise.

When doing any significant command-line work on Windows

(especially shell scripting), you're better off to completely

avoid paths with spaces or funny characters.

If you can't avoid it, use double-quotes and expect problems.

As with any Java program, file or directory paths on the command

line after "java" can use forward slashes instead of back slashes

(this goes for System properties and the

CLASSPATH variable too).

I use forward slashes because they can be used consistently, and

I don't have to contort my fingers to type them :).

If you are using SqlTool from a HyperSQL distribution of version 2.2.5 or earlier, you should use the documentation with that distribution, because this manual documents many new features, several significant changes to interactive-only commands, and a few changes effecting backwards-compatibility (see next section about that). This document is now updated for the current versions of SqlTool and SqlFile at the time I am writing this (versions 6632 and 6559 correspondingly-- SqlFile is the class which actually processes the SQL content for SqlTool). Therefore, if you are using a version of SqlTool or SqlFile that is more than a couple revisions greater, you should find a newer version of this document. (The imprecision is due to content-independent revision increments at build time, and the likelihood of one or two behavior-independent bug fixes after public releases). The startup banner will report both versions when you run SqlTool interactively. (Dotted version numbers of SqlTool and SqlFile definitely indicate ancient versions).

This guide covers SqlTool as bundled with HSQLDB after 2.2.5. [1]

This section lists changes to SqlTool since the last major release of HSQLDB which may effect the portability of SQL scripts. For this revision of this document, this list consists of script-impacting changes made to SqlTool after the 2.3.3 HyperSQL release.

-

Leading and trailing white-space of special command arguments

are no longer generally ignored.

If you don't want to give a parameter to a special command,

then don't include any extra whitespace.

This was changed so that \p commands can output whitespace or

strings beginning or ending with whitespace, without having

\p parse parameters differently from every other special

command.

Whenever you give a parameter to a special command, a single

white-space character is used just to separate command from

argument(s) and then the remainder is the argument(s).

Some specific special commands will then trim the

arguments captured this way, but \p commands will not.

For example, a single space or tab is required after \p or

\pr, and then

the rest of the string is precisely what will be output.

For example, command "

\p one" would output "one"; and "\p two" would output "two"; and "\p" would output " - Warnings are only displayed in interactive mode if the message is reporting about intended behavior. For example, warnings that a work-around is being used to distinguish system objects, or that the database object isn't returning a meaningful value. Script designers will learn these things when designing their scripts and running the commands interactively. For automated usage, these scripts clutter output and would have to be filtered out.

To reduce duplication, new features are listed in the Recent Functional Changes section are not repeated here, so check that list too.

- RC Data file 'urlid' field values are now comma-delimited list matched against candidate urlids. This is downward-compatible since a single entry without metacharacters will still require an exact match of the candidate string. This allows for specifying defaults with precedence and eliminates redundancy for maximum conciseness.

- Input files may now be specified by URL. This applies to specification of SQL script files on the command-line, as well as data imports (DSV, XML, and binary), and @ construct relative paths from them correctly.

- Special command \p now has a 'n' option that doesn't output a line delimiter. This is similar to UNIX echo's -n switch or \c escape.

- Added System PL Variables *SCRIPT, *SCRIPT_FILE, *SCRIPT_BASE, *HOST, *HOSTNAME, *ROWS. See SqlTool System PL Variables appendix.

![[Warning]](../images/db/warning.png) | Warning |

|---|---|

|

If you are using an Oracle database server, it will commit your current transaction if you cleanly disconnect, regardless of whether you have set auto-commit or not. This will occur if you exit SqlTool (or any other client) in the normal way (as opposed to killing the process or using Ctrl-C, etc.). This is mentioned in this section only for brevity, so I don't need to mention it in the main text in the many places where auto-commit is discussed. This behavior has nothing to do with SqlTool. It is a quirk of Oracle. |

If you want to use SqlTool, then you either have an SQL text file, or you want to interactively type in SQL commands. If neither case applies to you, then you are probably looking at the wrong program.

Procedure 1.1. To run SqlTool...

-

Copy the file

sample/sqltool.rc[1] of your HyperSQL distribution to your home directory and secure access to it if your computer is accessible to anybody else (most likely from the network). This file will work as-is for a Memory Only database instance; or if your target is a HyperSQL Server running on your local computer with default settings and the password for the "SA" account is blank (the SA password is blank when new HyperSQL database instances are created). Edit the file if you need to change the target Server URL, username, password, character set, JDBC driver, or TLS trust store as documented in the RC File Authentication Setup section. You could, alternatively, use the--inlineRccommand-line switch or the \j special command to connect up to a data source, as documented below. -

Find out where your

sqltool.jarfile resides. It typically resides atHSQLDB_HOME/lib/sqltool.jarwhereHSQLDB_HOMEis the "hsqldb" directory inside the root level of your HyperSQL software installation. (For example, if you extracthsqldb-9.1.0.zipintoc:\temp, yourHSQLDB_HOMEwould bec:/temp/hsqldb-9.1.0/hsqldb. Your file may also have a version label in the file name, likesqltool-1.2.3.4.jar. The forward slashes work just fine on Windows). For this reason, I'm going to use "$HSQLDB_HOME/lib/sqltool.jar" as the path tosqltool.jarfor my examples, but understand that you need to use the actual path to your ownsqltool.jarfile. (Unix users may set a real env. variable if they wish, in which case the examples may be used verbatim; Window users may do the same, but will need to dereference the variables like%THIS%instead of like$THIS).![[Warning]](../images/db/warning.png)

Warning My examples assume there are no spaces or funky characters in your file paths. This avoids bugs with the Windows cmd shell and makes for simpler syntax all-around. If you insist on using directories with spaces or shell metacharacters (including standard Windows home directories like

C:\Documents and Settings\blaine), you will need to double-quote arguments containing these paths. (On UNIX you can alternatively use single-quotes to avoid variable dereferencing at the same time). -

If you are just starting with SqlTool, you are best off running your SqlTool command from a shell command-line (as opposed to by using icons or the Windows' or ). This way, you will be sure to see error messages if you type the command wrong or if SqlTool can't start up for some reason. On recent versions of Windows, you can get a shell by running

cmdfrom or ). On UNIX or Linux, any real or virtual terminal will work.On your shell command line, run

java -jar $HSQLDB_HOME/lib/sqltool.jar --help

to see what command-line arguments are available. Note that you don't need to worry about setting the

CLASSPATHwhen you use the-jarswitch tojava.To run SqlTool without a JDBC connection, run

java -jar $HSQLDB_HOME/lib/sqltool.jar

You won't be able to execute any SQL, but you can play with the SqlTool interface (including using PL features).

To execute SQL, you'll need the classes for the target database's JDBC driver (and database engine classes for in-process databases). As this section is titled The Bare Minimum, I'll just say that if you are running SqlTool from a HyperSQL product installation, you are all set to connect to any kind of HyperSQL database. This is because SqlTool will look for the file

hsqldb.jarin the same directory assqltool.jar, and that file contains all of the needed classes. (SqlTool supports all JDBC databases and does not require a HyperSQL installation, but these cases would take us beyond the bare minimum). So, withhsqldb.jarin place, you can run commands likejava -jar $HSQLDB_HOME/lib/sqltool.jar mem

for interactive use, or

java -jar $HSQLDB_HOME/lib/sqltool.jar --sql="SQL statement;" mem

or

java -jar $HSQLDB_HOME/lib/sqltool.jar mem filepath1.sql...

where

memis an urlid, and the following arguments are paths to text SQL files. Filepath may be a local file path that can use whatever wildcards your operating system shell supports; or a URL.The urlid

memin these commands is a key into your RC file, as explained in the RC File Authentication Setup section. Since this is a mem: type catalog, you can use SqlTool with this urlid immediately with no database setup whatsoever (however, you can't persist any changes that you make to this database). The sample sqltool.rc file also defines the urlid "localhost-sa" for a local HyperSQL Listener. At the end of this section, I explain how you can load some sample data to play with, if you want to.

![[Tip]](../images/db/tip.png) | Tip |

|---|---|

|

If SqlTool fails to connect to the specified urlid and you don't

know why, add the invocation parameter |

![[Important]](../images/db/important.png) | You are responsible for Commit behavior |

|---|---|

|

SqlTool does not commit SQL changes by default.

(You can use the |

If you put a file named auto.sql into your

home directory, this file will be executed automatically every

time that you run SqlTool interactively (unless you invoke with

the --noAutoFile switch).

I did say interactively: If you want to

execute this file when you execute SQL scripts from the command

line, then your script must use

\i ${user.home}/auto.sql or similar to invoke

it explicitly.

To use a JDBC Driver other than the HyperSQL driver, you can't use

the -jar switch because you need to modify the

classpath.

You must add the sqltool.jar file and your JDBC

driver classes to your classpath,

and you must tell SqlTool what the JDBC driver class name is.

The latter can be accomplished by either using the "--driver"

switch, or setting "driver" in your config file.

The RC File Authentication Setup section.

explains the second method. Here's an example of the first method

(after you have set the classpath appropriately).

java org.hsqldb.cmdline.SqlTool --driver=oracle.jdbc.OracleDriver urlid

![[Tip]](../images/db/tip.png) | Tip |

|---|---|

|

If the tables of query output on your screen are all messy because of lines wrapping, the best and easiest solution is usually to resize your terminal emulator window to make it wider. (With some terms you click & drag the frame edges to resize, with others you use a menu system where you can enter the number of columns). |

Single and double-quotes are not treated specially by SqlTool. This makes SqlTool more intuitive than most shell languages, ensures that quotes sent to the database engine are not adulterated, and eliminates the need for somehow escaping quote characters.

Line delimiters are special, as that is the primary means for SqlTool

to tell when a command is finished (requiring combination with

semi-colon to support multi-line SQL statements).

Spaces and tabs are preserved inside of your strings and variable

values, but are trimmed from the beginning in nearly all cases

(such space having very rare usefulness).

The cases where leading whitespace is preserved exactly as specified

in your strings are the : commands (including

* VARNAME :,

/: VARNAME, \x :, and

\xq :).

So, if you write the SQL command

INSERT into t values ('one '' and '' two');

or the SqlTool print command

\p A message for my 'Greatest... fan'

you just type exactly what you want to send to the database, or what you want displayed.

To repeat what is stated in the JavaDoc for the

SqlTool

class itself:

Programmatic users will usually want to use the

objectMain(String[]) method if they want arguments and behavior

exactly like command-line SqlTool. If you don't need invocation

parameter parsing, auto.sql execution, etc.,

you will have more control and efficiency by using the SqlFile

class directly. The file

src/org/hsqldb/sample/SqlFileEmbedder.java

in the HyperSQL distribution provides an example for this latter

strategy.

There are some SQL types which SqlTool (being a text-based

program) can't display properly.

This includes the SQL types BLOB,

JAVA_OBJECT, STRUCT,

and OTHER.

When you run a query that returns any of these, SqlTool will

save the very first such value obtained to the binary buffer

and will not display any output from this query.

You can then save the binary value to a file, as explained in the

Storing and Retrieving Binary Files

section.

There are other types, such as BINARY, which

JDBC can make displayable (by using ResultSet.getString()), but

which you may very well want to retrieve in raw binary format.

You can use the \b command to retrieve any-column-type-at-all

in raw binary format (so you can later store the value to a

binary file).

Another restriction which all text-based database clients have is the practical inability for the user to type in binary data such as photos, audio streams, and serialized Java objects. You can use SqlTool to load any binary object into a database by telling SqlTool to get the insert/update datum from a file. This is also explained in the Storing and Retrieving Binary Files section.

See the Chunking section if you need to execute any compound SQL commands or SQL commands containing non-escaped/quoted semi-colons.

Desktop shortcuts and quick launch icons are useful, especially

if you often run SqlTool with the same set of arguments.

It's really easy to set up several of them-- one for each

way that you invoke SqlTool (i.e., each one would start

SqlTool with all the arguments for one of your typical startup

needs).

One typical setup is to have one shortcut for each database

account which you normally use (use a different

urlid argument in each shortcut's

Target specification.

Desktop icon setup varies depending on your Desktop manager, of course. I'll explain how to set up a SqlTool startup icon in Windows XP. Linux and Mac users should be able to take it from there, since it's easier with the common Linux and Mac desktops.

Procedure 1.2. Creating a Desktop Shortcut for SqlTool

-

Right click in the main Windows background.

-

-

-

-

Navigate to where your good JRE lives. For recent Sun JRE's, it installs to

C:\Program Files\Java\*\binby default (the * will be a JDK or JRE identifier and version number). -

Select

java.exe. -

-

-

Enter any name

-

-

Right click the new icon.

-

-

Edit the Target field.

-

Leave the path to java.exe exactly as it is, including the quotes, but append to what is there. Beginning with a space, enter the command-line that you want run.

-

to a pretty icon.

-

If you want a quick-launch icon instead of (or in addition to) a desktop shortcut icon, click and drag it to your quick launch bar. (You may or may not need to edit the Windows Toolbar properties to let you add new items). Postnote: Quick launch setup has become more idiosyncratic on the more recent versions of Windows, sometimes requiring esoteric hacks to make them in some cases. So, if the instructions here don't work, you'll have to seek help elsewhere.

If you want some sample database objects and data to play

with, execute the

sample/sampledata.sql SQL file

[1].

To separate the sample data from your regular data, you can

put it into its own schema by running this before you import:

CREATE SCHEMA sampledata AUTHORIZATION dba; SET SCHEMA sampledata;

Run it like this from an SqlTool session

\i HSQLDB_HOME/sample/sampledata.sql

where HSQLDB_HOME is the base directory of your HSQLDB software installation [1].

For memory-only databases, you'll need to run this every time that you run SqlTool. For other (persistent) databases, the data will reside in your database until you drop the tables.

As discussed earlier, only the single file

sqltool.jar is required to run SqlTool (the file

name may contain a version label like

sqltool-1.2.3.4.jar).

But it's useless as an SQL Tool unless you can

connect to a JDBC data source, and for that you need the target

database's JDBC driver in the classpath.

For in-process catalogs, you'll also need the

database engine classes in the CLASSPATH.

The The Bare Minimum

section explains that the easiest way to use SqlTool with any HyperSQL

database is to just use sqltool.jar in-place where

it resides in a HyperSQL installation.

This section explains how to satisfy the CLASSPATH requirements for

other setups and use cases.

If you are using SqlTool to access non-HSQLDB database(s), then you should use the latest and greatest-- just grab the newest public release of SqlTool (like from the latest public HyperSQL release) and skip this subsection.

You are strongly encouraged to use the latest SqlTool release to access older HSQLDB databases, to enjoy greatly improved SqlTool robustness and features. It is very easy to do this.

-

Obtain the latest

sqltool.jarfile. One way to obtain the latestsqltool.jarfile is to download the latest HyperSQL distribution and extract that single file -

Place (or copy) your new

sqltool.jarfile right alongside thehsqldb.jarfile for your target database version. If you don't have a local copy of thehsqldb.jarfile for your target database, just copy it from your database server, or download the full distribution for that server version and extract it. -

(If you have used older versions of SqlTool before, notice that you now invoke SqlTool by specifying the

sqltool.jarfile instead of thehsqldb.jar). If your target database is a previous 2.x version of HyperSQL, then you are finished and can use the new SqlTool for your older database. Users upgrading from a pre-2.x version please continue...Run SqlTool like this.

java -jar path/to/sqltool.jar --driver=org.hsqldb.jdbcDriver...

where you specify the pre-2.x JDBC driver name

org.hsqldb.jdbcDriver. Give any other SqlTool parameters as you usually would.Once you have verified that you can access your database using the

--driverparameter as explained above, edit yoursqltool.rcfile, and add a new linedriver org.hsqldb.jdbcDriver

after each urlid that is for a pre-2.x database. Once you do this, you can invoke SqlTool as usual (i.e. you no longer need the

--driverargument for your invocations).

For these situations, you need to add your custom, third-party, or

SQL driver classes to your Java CLASSPATH.

Java doesn't support adding arbitrary elements to the classpath when

you use the -jar, so you must set a classpath

containing sqltool.jar plus whatever else you

need, then invoke SqlTool without the -jar switch,

as briefly described at the end of the

The Bare Minimum

section.

For embedded apps, invoke your own main class instead of SqlTool, and

you can invoke SqlTool or

SqlFile from your code base.

To customize the classpath,

you need to set up your classpath by using your

operating system or shell variable CLASSPATH or

by using the java switch -cp

(or the equivalent -classpath).

I'm not going to take up space here to explain how to set up a

Java CLASSPATH. That is a platform-dependent task that is

documented well in tons of Java introductions and tutorials.

What I'm responsible for telling you is what

you need to add to your classpath.

For the non-embedded case where you have set up your CLASSPATH

environmental variable, you would invoke SqlTool like this.

java org.hsqldb.cmdline.SqlTool ...

If you are using the -cp switch instead of a

CLASSPATH variable, stick it after

java.

After "SqlTool", give any SqlTool parameters

exactly as you would put after

java -jar .../sqltool.jar if you didn't need to

customize the CLASSPATH.

You can specify a JDBC driver class to use either with the

--driver switch to SqlTool, or in your

RC file stanza (the last method is usually more convenient).

Note that without the -jar switch, SqlTool will

still automatically pull in HyperSQL JDBC driver or engine classes

from HyperSQL jar files in the same directory.

It's often a good practice to minimize your runtime classpath.

To prevent the possibility of pulling in classes from other HyperSQL

jar files, just copy sqltool.jar to some other

directory (which does not contain other HyperSQL jar files) and put

the path to that one in your classpath.

You can distribute SqlTool along with your application, for

standalone or embedded invocation.

For embedded use, you will need to customize the classpath as

discussed in the previous item.

Either way, you should minimize your application footprint by

distributing only those HyperSQL jar files needed by your app.

You will obviously need sqltool.jar if you will

use the SqlTool or

SqlFile class in any way.

If your app will only connect to external HyperSQL listeners, then

build and include hsqljdbc.jar.

If your app will also run a HyperSQL Listener,

you'll need to include hsqldb.jar.

If your app will connect directly to a

in-process catalog, then include

hsqldbmain.jar.

Note that you never need to include more than one of

hsqldb.jar, hsqldbmain.jar,

hsqljdbc.jar, since the former jars include

everything in the following jars.

If you just want to be able to run SqlTool (interactively or

non-interactively) on a PC, and have no need for documentation, then

it's usually easiest to just copy

sqltool.jar and hsqldb.jar

to the PCs (plus JDBC driver jars for any other target databases).

If you want to minimize what you distribute, then build and

distribute hsqljdbc.jar or

hsqldbmain.jar instead of

hsqldb.jar, according to the criteria listed in

the previous sub-section.

RC file authentication setup is accomplished by creating a text RC configuration file. In this section, when I say configuration or config file, I mean an RC configuration file. RC files can be used by any JDBC client program that uses the org.hsqldb.util.RCData class-- this includes SqlTool, DatabaseManager, DatabaseManagerSwing.

You can use it for your own JDBC client programs too.

There is example code showing how to do this at

src/org/hsqldb/sample/SqlFileEmbedder.java.

The sample RC file shown here resides at

sample/sqltool.rc

in your HSQLDB distribution

[1].

The file consists of comments and blank lines,

urlid patterns that are matched against,

and assignments (all other lines).

A stanza is a block of lines from one urlid line until before the

next urlid line (or end of file).

Example 1.1. Sample RC File

# $Id: sqltool.rc 6381 2021-11-18 21:45:56Z unsaved $

# This is a sample RC configuration file used by SqlTool, DatabaseManager,

# and any other program that uses the org.hsqldb.lib.RCData class.

# See the documentation for SqlTool for various ways to use this file.

# This is not a Java Properties file. It uses a custom format with stanzas,

# similar to .netrc files.

# If you have the least concerns about security, then secure access to

# your RC file.

# You can run SqlTool right now by copying this file to your home directory

# and running

# java -jar /path/to/sqltool.jar mem

# This will access the first urlid definition below in order to use a

# personal Memory-Only database.

# "url" values may, of course, contain JDBC connection properties, delimited

# with semicolons.

# As of revision 3347 of SqlFile, you can also connect to datasources defined

# here from within an SqlTool session/file with the command "\j urlid".

# You can use Java system property values in this file like this: ${user.home}

# Windows users are advised to use forward slashes instead of back-slashes,

# and to avoid paths containing spaces or other funny characters. (This

# recommendation applies to any Java app, not just SqlTool).

# It is a runtime error to do a urlid lookup using RCData class and to not

# match any stanza (via urlid pattern) in this file.

# Three features added recently. All are downward-compatible.

# 1. urlid field values in this file are now comma-separated (with optional

# whitespace before or after the commas) regular expressions.

# 2. Each individual urlid token value (per previous bullet) is a now a regular

# expression pattern that urlid lookups are compared to. N.b. patterns must

# match the entire lookup string, not just match "within" it. E.g. pattern

# of . would match lookup candidate "A" but not "AB". .+ will always match.

# 3. Though it is still an error to define the same exact urlid value more

# than once in this file, it is allowed (and useful) to have a url lookup

# match more than one urlid pattern and stanza. Assignments are applied

# sequentially, so you should generally add default settings with more

# liberal patterns, and override settings later in the file with more

# specific (or exact) patterns.

# Since service discovery works great in all JREs for many years now, I

# have removed all 'driver' specifications here. JRE discover will

# automatically resolve the driver class based on the JDBC URL format.

# Most people use default ports, so I have removed port specification from

# examples except for Microsoft's Sql Server driver where you can't depend

# on a default port.

# In all cases, to specify a non-default port, insert colon and port number

# after the hostname or ip address in the JDBC URL, like

# jdbc:hsqldb:hsql://localhost:9977 or

# jdbc:sqlserver://hostname.admc.com:1433;databaseName=dbname

# Amazon Aurora instances are access from JDBC exactly the same as the

# non-Aurora RDS counterpart.

# For using any database engine other than HyperSQL, you must add the

# JDBC jar file and the SqlTool jar to your CLASSPATH then run a command like:

# java org.hsqldb.util.SqlTool...

# I.e., the "-jar" switch doesn't support modified classpath.

# (See SqlTool manual for how to do same thing using Java modules.)

# To oversimplify for non-developers, the two most common methods to set

# CLASSPATH for an executable tool like SqlTool are to either use the java

# "-cp" switch or set environmental variable CLASSPATH.

# Windows users can use graphical UI or CLI "set". Unix shell users must

# "export" in addition to assigning.

#

# All JDBC jar files used in these examples are available from Maven

# repositories. You can also get them from vendor web sites or with product

# bundles (especially database distributions).

# Most databases provide multiple variants. Most people will want a type 4

# driver supporting your connection mechanism (most commonly TCP/IP service,

# but also database file access and others) and your client JRE version.

# By convention the variants are distinguished in segments of the jar file

# name before the final ".jar" .

# Global default. .+ matches all lookups:

urlid .+

username SA

password

# A personal Memory-Only (non-persistent) database.

# Inherits username and password from default setting above.

urlid mem

url jdbc:hsqldb:mem:memdbid

# A personal, local, persistent database.

# Inherits username and password from default setting above.

urlid personal

url jdbc:hsqldb:file:${user.home}/db/personal;shutdown=true;ifexist=true

transiso TRANSACTION_READ_COMMITTED

# When connecting directly to a file database like this, you should

# use the shutdown connection property like this to shut down the DB

# properly when you exit the JVM.

# This is for a hsqldb Server running with default settings on your local

# computer (and for which you have not changed the password for "SA").

# Inherits username and password from default setting above.

# Default port 9001

urlid localhost-sa

url jdbc:hsqldb:hsql://localhost

# Template for a urlid for an Oracle database.

# Driver jar files from this century have format like "ojbc*.jar".

# Default port 1521

urlid localhost-sa

# Avoid older drivers because they have quirks.

# You could use the thick driver instead of the thin, but I know of no reason

# why any Java app should.

#urlid cardiff2

# Can identify target database with either SID or global service name.

#url jdbc:oracle:thin:@//centos.admc.com/tstsid.admc

#username blaine

#password asecret

# Template for a TLS-encrypted HSQLDB Server.

# Remember that the hostname in hsqls (and https) JDBC URLs must match the

# CN of the server certificate (the port and instance alias that follows

# are not part of the certificate at all).

# You only need to set "truststore" if the server cert is not approved by

# your system default truststore (which a commercial certificate probably

# would be).

# Port defaults to 554.

#urlid tls

#url jdbc:hsqldb:hsqls://db.admc.com:9001/lm2

#username BLAINE

#password asecret

#truststore ${user.home}/ca/db/db-trust.store

# Template for a Postgresql database

# Driver jar files are of format like "postgresql-*.jar"

# Port defaults to 5432.

#urlid blainedb

#url jdbc:postgresql://idun.africawork.org/blainedb

#username blaine

#password asecret

# Amazon RedShift (a fork of Postgresql)

# Driver jar files are of format like "redshift-jdbc*.jar"

# Port defaults to 5439.

#urlid redhshift

#url jdbc:redshift://clustername.hex.us-east-1.redshift.amazonaws.com/dev

#username awsuser

#password asecret

# Template for a MySQL database. MySQL has poor JDBC support.

# The latest driver jar files are of format like "mysql-jdbc*.jar", but not

# long ago they were like "mysql-connector-java*.jar".

# Port defaults to 3306

#urlid mysql-testdb

#url jdbc:mysql://hostname/dbname

#username root

#password asecret

# Alternatively, you can access MySQL using jdbc:mariadb URLs and driver.

# Note that "databases" in SQL Server and Sybase are traditionally used for

# the same purpose as "schemas" with more SQL-compliant databases.

# Template for a Microsoft SQL Server database using Microsoft's Driver

# Seems that some versions default to port 1433 and others to 1434.

# MSDN implies instances are port-specific, so can specify port or instname.

#urlid msprojsvr

# Driver jar files are of format like "mssql-jdbc-*.jar".

# Don't use older MS JDBC drivers (like SQL Server 2000 vintage) because they

# are pitifully incompetent, handling transactions incorrectly.

# I recommend that you do not use Microsoft's nonstandard format that

# includes backslashes.

#url jdbc:sqlserver://hostname;instanceName=instname;databaseName=dbname

# with port:

#url jdbc:sqlserver://hostname:1433;instanceName=instname;databaseName=dbname

#username myuser

#password asecret

# Template for Microsoft SQL Server database using the JTDS Driver

# Looks like this project is no longer maintained, so you may be better off

# using the Microsoft driver above.

# http://jtds.sourceforge.net Jar file has name like "jtds-1.3.1.jar".

# Port defaults to 1433.

# MSDN implies instances are port-specific, so can specify port or instname.

#urlid nlyte

#username myuser

#password asecret

#url jdbc:jtds:sqlserver://myhost/nlyte;instance=MSSQLSERVER

# Where database is 'nlyte' and instance is 'MSSQLSERVER'.

# N.b. this is diff. from MS tools and JDBC driver where (depending on which

# document you read), instance or database X are specified like HOSTNAME\X.

# Template for a Sybase database

#urlid sybase

#url jdbc:sybase:Tds:hostname:4100/dbname

#username blaine

#password asecret

# This is for the jConnect driver (requires jconn3.jar).

# Derby / Java DB.

# Please see the Derby JDBC docs, because they have changed the organization

# of their driver jar files in recent years. Combining that with the different

# database types supported and jar file classpath chaining, and it's not

# feasible to document it adequately here.

# I'll just give one example using network service, which works with 10.15.2.0.

# Put files derbytools*.jar, derbyclient*.jar, derbyshared*.jar into a

# directory and include the path to the derbytools.jar in your classpath.

# Port defaults to 1527.

#url jdbc:derby://server:<port>/databaseName

#username ${user.name}

#password any_noauthbydefault

# If you get the right classes into classpath, local file URLs are like:

#url jdbc:derby:path/to/derby/directory

# You can use \= to commit, since the Derby team decided (why???)

# not to implement the SQL standard statement "commit"!!

# Note that SqlTool can not shut down an embedded Derby database properly,

# since that requires an additional SQL connection just for that purpose.

# However, I've never lost data by shutting it down improperly.

# Other than not supporting this quirk of Derby, SqlTool is miles ahead of

# Derby's ij.

# Maria DB

# With current versions, the MySQL driver does not work to access a Maria

# database (though the inverse works).

# Driver jar files are of format like "mariadb-java-client*.jar"

# Port defaults to 3306

#urlid maria

#url jdbc:mariadb://hostname/db2

#username blaine

#password asecret

As noted in the comment (and as used in a couple examples), you

can use Java system properties like this: ${user.home}.

Windows users, please read the suggestion directed to you in the

file.

You can put this file anywhere you want to, and specify the

location to SqlTool/DatabaseManager/DatabaseManagerSwing by

using the --rcfile argument.

If there is no reason to not use the default location (and there

are situations where you would not want to), then use the default

location and you won't have to give --rcfile

arguments to SqlTool/DatabaseManager/DatabaseManagerSwing.

The default location is sqltool.rc or

dbmanager.rc in your home directory

(corresponding to the program using it).

If you have any doubt about where your home directory is, just

run SqlTool with a phony urlid and it will tell you where it

expects the configuration file to be.

java -jar $HSQLDB_HOME/lib/sqltool.jar x

There are cases where you can't use the RC file at the default location. You may have no home directory. Another directory may be more secure. Or you may have multiple RC files that you use for different purposes. Either way, you can specify any RC filepath (absolute or relative) like so:

java -jar $HSQLDB_HOME/lib/sqltool.jar --rcFile=path/to/rc/file.name urlid

If SqlTool can't open the specified RC file or there is a syntax error in it, you'll get a useful error message. You'll also get an error message if there is no match for the urlid value that you look up.

The config file consists of stanza(s) beginning

with urlid

urlid web.+, app.+

url jdbc:hsqldb:hsql://localhost

username web

password webspassword

Only the urlid field is required, and the

value of it must be a comma-delimited (with optional white-space

on either side) list of regular expression patterns which are

matched against candidate urlid lookups.

One urlid pattern may only appear in the file once, but a candidate

urlid may match multiple patterns, and that allows you to set

defaults and apply overrides, etc. (see paragraph below about that).

Be aware that after all of the matching for a lookup is done, the

results won't be usable to establish a connection unless you have

assigned at least a url value (more often than

not a username and password will also be required).

The URL may contain JDBC connection properties.

You can have as many blank lines and comments like

# This comment

in the file as you like. The whole point is that the urlid that you give in your SqlTool/DatabaseManager command must match at least one urlid in your configuration file so that values like url and username can be assigned to use for connection attempts.

![[Warning]](../images/db/warning.png) | Warning |

|---|---|

|

Use whatever facilities are at your disposal to protect your configuration file. |

The specified urlid patterns must match then entire lookup candidate urlid values. For example, a pattern of . would match lookup candidate "A" but not "AB". Pattern of .+ will always match.

Assignments are applied in the order they occur in the file, with lower assignments overriding earlier assignments. Therefore, you will generally want to use more general urlid patterns toward the top to specify default values, and more specific urlid patterns toward the bottom to specify overrides.

The file should be readable, both locally and remotely, only to

users who run programs that need it.

On UNIX, this is easily accomplished by using chmod/chown

commands and making sure that it is protected from

anonymous remote access (like via NFS, FTP or Samba).

You can also put the following optional settings into a urlid stanza. The setting will, of course, only apply to that urlid.

|

charset | This is used by the SqlTool program, but not by the DatabaseManager programs. See the Character Encoding section of the Non-Interactive section. This is used for input and output files, not for stdin or stdout, which are controlled by environmental variables and Java system properties. If you set no encoding for an urlid, input and outfiles will use the same encoding as for stdin/stdout. (As of right now, the charset setting here is not honored by the \j command, but only when SqlTool loads an urlid specified on the command-line). |

|

driver | Sets the JDBC driver class name. You can, alternatively, set this for one SqlTool/DatabaseManager invocation by using the command line switch --driver. Defaults to org.hsqldb.jdbc.JDBCDriver. |

|

truststore |

TLS trust keystore store file path as documented in the

TLS section of the Listeners chapter of the

HyperSQL User Guide

You usually only need to set this if the server is using a

non-publicly-certified certificate (like a self-signed

self-ca'd cert).

Relative paths will be resolved relative to the

${user.dir}

system property at JRE invocation time.

|

|

transiso |

Specify the Transaction Isolation Level with an all-caps

string, exactly as listed in he Field Summary of the Java

API Spec for the class

java.sql.Connection.

|

Property and SqlTool command-line switches override settings made in the configuration file.

The \j command lets you switch JDBC Data Sources in your SQL files

(or interactively).

"\?" shows the syntax to make a connection by either RCData urlid

or by name + password + JDBC Url.

(If you omit the password parameter, an empty string password will

be used).

The urlid variant uses RC file of

$HOME/sqltool.rc.

We will add a way to specify an RC file if there is any demand for

that.

You can start SqlTool without any JDBC Connection by specifying no Inline RC and urlid of "-" (just a hyphen). If you don't need to specify any SQL file paths, you can skip the hyphen, as in this example.

java -jar $HSQLDB_HOME/lib/sqltool.jar -Pv1=one

(The "-" is required when specifying one or more SQL files, in order to distinguish urlid-spec from file-spec). Consequently, if you invoke SqlTool with no parameters at all, you will get a SqlTool session with no JDBC Connection. You will obviously need to use \j before doing any database work.

Inline RC authentication setup is accomplished by using the

--inlineRc command-line switch on SqlTool.

The --inlineRc command-line switch takes

a comma-separated list of key/value elements.

The url and user elements

are required. The rest are optional.

The --inlineRc switch is the only case where

you can give SQL file paths without a preceding urlid indicator

(an urlid or -).

The program knows not to look for an urlid if you give an inline.

Since commas are used to separate each name=value

pair, you must do some extra work for any commas inside of the

values of any name=values.

Escape them by proceeding them with backslash, like

"myName=my\p,value" to inform SqlTool that the

comma is part of the value and not a name/value separator.

|

| The JDBC URL of the database you wish to connect to. |

|

| The username to connect to the database as. |

|

| Sets the character encoding. Overrides the platform default, or what you have set by env variables or Java system properties. (Does not effect stdin or stdout). |

|

| The TLS trust keystore file path as documented in the TLS chapter. Relative paths will be resolved relative to the current directory. |

|

|

java.sql.Connection transaction

isolation level to connect with, as specified in the Java

API spec.

|

|

|

You may only use this element to set empty password, like password= For any other password value, omit the

|

(Use the --driver switch instead of

--inlineRc to specify a JDBC driver class).

Here is an example of invoking SqlTool to connect to a standalone database.

java -jar $HSQLDB_HOME/lib/sqltool.jar --inlineRc=url=jdbc:hsqldb:file:/home/dan/dandb,user=dan

For security reasons, you cannot specify a non-empty password as an argument. You will be prompted for a password as part of the login process.

Both the \l command and all warnings and error messages now use

a logging facility.

The logging facility hands off to Log4j if Log4j is found in the

classpath, and otherwise will hand off to

java.util.logging.

The default behavior of java.util.logging

should work fine for most users.

If you are using log4j and are redirecting with pipes, you may

want to configure a Console Appender with target of

"System.err" so that error output will go to

the error stream (all console output for

java.util.logging goes to stderr by default).

See the API specs for Log4j and for J2SE for how to configure

either product.

If you are embedding SqlTool in a product to process SQL files,

I suggest that you use log4j.

java.util.logging is neither scalable nor

well-designed.

Run the command \l? to see how to use the

logging command \l in your SQL files (or

interactively), including what logging levels you may specify.

Do read the The Bare Minimum section before you read this section.

You run SqlTool interactively by specifying no SQL filepaths on the SqlTool command line. Like this.

java -jar $HSQLDB_HOME/lib/sqltool.jar urlid

Procedure 1.3. What happens when SqlTool is run interactively (using all default settings)

-

SqlTool starts up and connects to the specified database, using your SqlTool configuration file (as explained in the RC File Authentication Setup section).

-

SQL file

auto.sqlin your home directory is executed (if there is one), -

SqlTool displays a banner showing the SqlTool and SqlFile version numbers and describes the different command types that you can give, as well as commands to list all of the specific commands available to you.

You exit your session by using the "\q" special command or ending input (like with Ctrl-D or Ctrl-Z).

![[Important]](../images/db/important.png) | Important |

|---|---|

|

Any command may be preceded by space characters. Special Commands, Edit Buffer Commands, PL Commands, Macros always consist of just one line. These rules do not apply at all to Raw Mode. Raw mode is for use by advanced users when they want to completely bypass SqlTool processing in order to enter a chunk of text for direct transmission to the database engine. |

If you are really comfortable with grep, perl, or vim, you will instantly be an expert with SqlTool command-line editing. Due to limitations of Java I/O, we can't use up-arrow recall, which many people are used to from DosKey and Bash shell. If you don't know how to use regular expressions, and don't want to learn how to use them, then just forget command-recall. (Actually DosKey does work from vanilla Windows MSDOS console windows. Be aware that it suffers from the same 20-year old quirks as DOS command-line editing. Very often the command line history will get shifted and you won't be able to find the command you want to recall. Usually you can work around this by typing a comment... "::" to DOS or "--" to SqlTool then re-trying on the next command line).

Basic command entry (i.e., without regexps)

- Just type in your command, and use the backspace-key to fix mistakes on the same line.

- If you goof up a multi-line command, just hit the ENTER key twice to start over. (The command will be moved to the buffer where it will do no harm).

- Use the ":h" command to view your command history. You can use your terminal emulator scroll bar and copy and paste facility to repeat commands.

- As long as you don't need to change text that is already in a command, you can easily repeat commands from the history like ":14;" to re-run command number 14 from history.

- Expanding just a bit from the previous item, you can add on to a previous command by running a command like ":14a" (where the "a" means append).

- See the Macros section about how to set and use macros.

If you use regular expressions to search through your command history, or to modify commands, be aware that the command type of commands in history are fixed. You can search and modify the text after a \ or * prefix (if any), but you can't search on or change a prefix (or add or remove one).

When you are typing into SqlTool, you are always typing part of the immediate command. If the immediate command is an SQL statement, it is executed as soon as SqlTool reads in the trailing (unquoted) semi-colon. Commands of the other command types are executed as soon as you hit ENTER. The interactive : commands can perform actions with or on the edit buffer. The edit buffer usually contains a copy of the last command executed, and you can always view it with the :b command. If you never use any : commands, you can entirely ignore the edit buffer. If you want to repeat commands or edit previous commands, you will need to work with the edit buffer. The immediate command contains whatever (and exactly what) you type. The command history and edit buffer may contain any type of command other than comments and : commands (i.e., : commands and comments are just not copied to the history or to the edit buffer).

Hopefully an example will clarify the difference between the

immediate command and the edit buffer.

If you type in the edit buffer Substitution command

":s/tbl/table/", the :s command that you typed

is the immediate command (and it will never be stored to the

edit buffer or history, since it is a : command), but the purpose

of the substitution command is to modify the contents of the

edit buffer (perform a substitution on it)-- the goal being that

after your substitutions you would execute the buffer with the

":;" command.

The ":a" command is special in that when you hit ENTER to execute

it, it copies the contents of the edit buffer to a new immediate

command and leaves you in a state where you are

appending to that

immediate command (nearly) exactly as if

you had just typed it in.

You can run SqlTool interactively, but have SqlTool behave exactly as if it were processing an SQL file (i.e., no command-line prompts, error-handling that defaults to fail-upon-error, etc.). Just specify "-" as the SQL file name in the command line. This is a good way to test what SqlTool will do when it encounters any specific command in an SQL file. See the Piping and shell scripting subsection of the Non-Interactive chapter for an example.

Command types

|

SQL Statement |

Any command that you enter which does not begin with "\", ":", "* " or "/" is an SQL Statement. The command is not terminated when you hit ENTER, like most OS shells. You terminate SQL Statements with either ";" or with a blank line. In the former case, the SQL Statement will be executed against the SQL database and the command will go into the edit buffer and SQL command history for editing or viewing later on. In the former case, execute against the SQL database means to transmit the SQL text to the database engine for execution. In the latter case (you end an SQL Statement with a blank line), the command will go to the edit buffer and SQL history, but will not be executed (but you can execute it later from the edit buffer). (Blank lines are only interpreted this way when SqlTool is run interactively. In SQL files, blank lines inside of SQL statements remain part of the SQL statement). As a result of these termination rules, whenever you are entering text that is not a Special Command, Edit Buffer / History Command, or PL Command, you are always appending lines to an SQL Statement or comment. (In the case of the first line, you will be appending to an empty SQL statement. I.e. you will be starting a new SQL Statement or comment). |

|

Special Command | Run the command "\?" to list the Special Commands. All of the Special Commands begin with "\". I'll describe some of the most useful Special Commands below. |

|

Edit Buffer / History Command | Run the command ":?" to list the Edit-Buffer/History Commands. All of these commands begin with ":". These commands use commands from the command history, or operate upon the edit "buffer", so that you can edit and/or (re-)execute previously entered commands. |

|

PL Command |

Procedural Language commands.

Run the command " |

|

Macro Command | Macro definition and usage commands. Run the command "/?" to show the define, list, or use macros. |

|

Raw Mode | The descriptions of command-types above do not apply to Raw Mode. In raw mode, SqlTool doesn't interpret what you type at all. It all just goes into the edit buffer which you can send to the database engine. Beginners can safely ignore raw mode. You will never encounter it unless you run the "\." special command, or define a stored procedure or function. See the Raw Mode section for the details. |

Essential Special Commands

|

\? | In-program Help. Run this to show ALL available Special Commands instead of just the subset listed here! | ||||||||||||

|

\q | Quit | ||||||||||||

|

\j... | View JDBC Data Source details or connect up to a JDBC Data Source (replacing the current connection, if any). Run \? to see the syntax for the different usages. | ||||||||||||

|

\i path/to/script.sql |

Execute the specified SQL script, then continue again

interactively.

Since SqlTool is a Java program, you can safely use forward

slashes in your file paths, regardless of your operating

system.

You can use Java system properties like

${user.home}, PL variables like

*{this} and @ in your

file paths.

The last is mostly useful for \i statements inside of SQL files,

where it means the directory containing the

current script.

|

||||||||||||

|

\c true (or false) | Change error-handling (Continue-on-error) behavior from the default. By default when SqlTool is run interactively, errors will be reported but SqlTool will continue to process subsequent commands. By default when SqlTool is run non-interactively, errors will also cause SqlTool to stop processing the current stream (like stdin) or SQL file. The default settings are usually what is desired, except for SQL scripts which need to abort upon failures, even when invoked manually (including for interactive testing purposes). | ||||||||||||

|

\= |

Commit the current SQL transaction.

Most users are used to typing the SQL statement

commit;, but this command is crucial for

those databases which don't support the statement.

It's obviously unnecessary if you have auto-commit mode on.

|

||||||||||||

|

\m? | List a summary of DSV and CSV importing, and all available options for them. You can use variables in the file path specifications, as described for the \i command above. | ||||||||||||

|

\x? | Ditto. | ||||||||||||

|

\mq? | Ditto. | ||||||||||||

|

\xq? | Ditto. | ||||||||||||

|

\d? | List a summary of the \d commands below. | ||||||||||||

|

\dt [filter_substring] | |||||||||||||

|

\dv [filter_substring] | |||||||||||||

|

\ds [filter_substring] | |||||||||||||

|

\di [table_name] | |||||||||||||

|

\dS [filter_substring] | |||||||||||||

|

\da [filter_substring] | |||||||||||||

|

\dn [filter_substring] | |||||||||||||

|

\du [filter_substring] | |||||||||||||

|

\dr [filter_substring] | |||||||||||||

|

\d* [filter_substring] |

Lists available objects of the given type.

If your database supports schemas, then the schema name will also be listed. If you supply an optional filter substring, then only items which match the specified substring. will be listed. In most cases, the specified filter will be treated as a regular expression matched against the candidate object names. In order to take advantage of extreme server-side performance benefits, however, in some cases the substring is passed to the database server and the filter will processed by the server.

|

||||||||||||

|

\d objectname [[/]regexp] |

Lists names of columns in the specified table or view.

If you supply a filter string, then only columns with a name matching the given regular expression will be listd. (If no special characters are used, this just means that names containing the specified substring will match). You'll find this filter is a great convenience compared to other database utilities, where you have to list all columns of large tables when you are only interested in one of them.

To narrow the displayed information based on all column

outputs, instead of just the column names, just prefix the

expression with /.

For example, to list all INTEGER columns, you could run

|

This list here includes only the essential

Special Commands, but n.b. that

there are other useful Special Commands

which you can list by running \?.

(You can, for example, execute SQL from external SQL files, and

save your interactive SQL commands to files).

Some specifics of these other commands are specified immediately

below, and the

Generating Text or HTML Reports

section explains how to use the "\o" and "\h" special commands to

generate reports.

Be aware that the \! Special Command does

not work for external programs that read from standard input.

You can invoke non-interactive and graphical interactive programs,

but not command-line interactive programs.

SqlTool executes \! programs directly, it does

not run an operating system shell (this is to avoid OS-specific

code in SqlTool).

Because of this, you can give as many command-line arguments

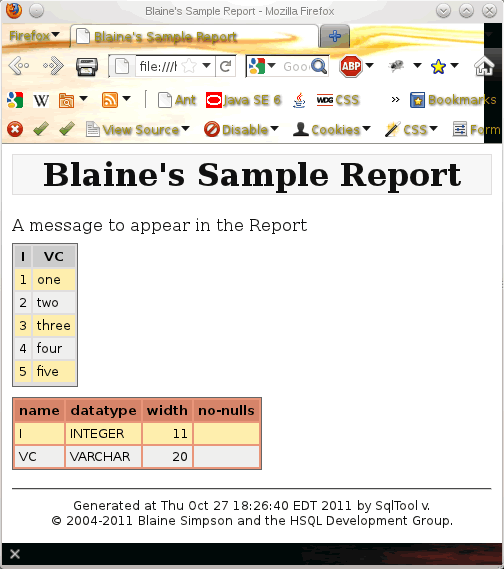

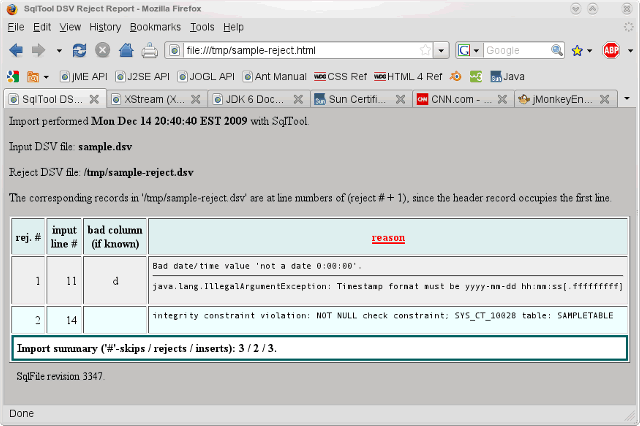

as you wish, but you can't use shell wildcards or redirection.

Edit Buffer / History Commands

|

:? | IN-program Help |

|

:b | List the current contents of the edit buffer. |

|

:h |

Shows the Command History.

For each command which has been executed (up to the max

history length), the SQL command history will show the

command; its command number (#); and also how many commands

back it is (as a negative number).

: commands are never added to the history list.

You can then use either form of the command identifier to

recall a command to the edit buffer (the command described

next) or as the target of any of the following : commands.

This last is accomplished in a manner very similar to the

vi editor.

You specify the target command number between the colon

and the command.

As an example, if you gave the command

:s/X/Y/, that would perform the

substitution on the contents of the edit buffer; but if you

gave the command :-3 s/X/Y/, that would

perform the substitution on the command 3 back in the

command history (and copy the output to the edit buffer).

Also, just like vi, you can identify the command to recall

by using a regular expression inside of slashes, like

:/blue/ s/X/Y/ to operate on the last

command you ran which contains "blue".

|

|

:13 OR :-2 OR :/blue/ |

Recalls a command from Command history to the edit buffer. Enter ":" followed by the positive command number from Command history, like ":13"... or ":" followed by a negative number like ":-2" for two commands back in the Command history... or ":" followed by a regular expression inside slashes, like ":/blue/" to recall the last command which contains "blue". The specified command will be written to the edit buffer so that you can execute it or edit it using the commands below. As described under the :h command immediately above, you can follow the command number here with any of the commands below to perform the given operation on the specified command from history instead of on the edit buffer contents. So, for example, ":4;" would load command 4 from history then execute it (see the ":;" command below). |

|

:; | Executes the SQL, Special or PL statement in the edit buffer (by default). This is an extremely useful command. It's easy to remember because it consists of ":", meaning Edit Buffer Command, plus a line-terminating ";", (which generally means to execute an SQL statement, though in this case it will also execute a special or PL command). |

|

:a |

Enter append mode with the contents of the edit buffer (by default) as the current command. When you hit ENTER, things will be nearly exactly the same as if you physically re-typed the command that is in the edit buffer. Whatever lines you type next will be appended to the immediate command. As always, you then have the choice of hitting ENTER to execute a Special or PL command, entering a blank line to store back to the edit buffer, or end a SQL statement with semi-colon and ENTER to execute it. You can, optionally, put a string after the :a, in which case things will be exactly as just described except the additional text will also be appended to the new immediate command. If you put a string after the :a which ends with ;, then the resultant new immediate command will just be executed right away, as if you typed in and entered the entire thing.

If your edit buffer contains

You may notice that you can't use the left-arrow or backspace key to back up over the original text. This is due to Java and portability constraints. If you want to edit existing text, then you shouldn't use the Append command. |

|

:s/from regex/to string/switches |

The Substitution Command is the primary method for SqlTool command editing-- it operates upon the current edit buffer by default. The "to string" and the "switches" are both optional (though the final "/" is not). To start with, I'll discuss the use and behavior if you don't supply any substitution mode switches. Don't use "/" if it occurs in either "from string" or "to string". You can use any character that you want in place of "/", but it must not occur in the from or to strings. Example :s@from string@to string@ The to string is substituted for the first occurrence of the (case-specific) from string. The replacement will consider the entire SQL statement, even if it is a multi-line statement.

In the example above, the from regex was a plain string, but

it is interpreted as a regular expression so you can do

all kinds of powerful substitutions.

See the Don't end a to string with ";" in attempt to make a command execute. There is a substitution mode switch to use for that purpose. You can use any combination of the substitution mode switches.

If you specify a command number (from the command history), you end up with a feature very reminiscent of vi, but even more powerful, since the Perl/Java regular expression are a superset of the vi regular expressions. As an example, :24 s/pin/needle/g; would start with command number 24 from command history, substitute "needle" for all occurrences of "pin", then execute the result of that substitution (and this final statement will of course be copied to the edit buffer and to command history). |

|

:w /path/to/file.sql | This appends the contents of the current buffer (by default) to the specified file. Since what is being written are Special, PL, or SQL commands, you are effectively creating an SQL script. To write some previous command to a file, just restore the command to the edit buffer with a command like ":-4" before you give the :w command. |

I find the ":/regex/" and ":/regex/;" constructs particularly handy for every-day usage.

:/\\d/;

re-executes the last \d command that you gave (The extra "\" is needed to escape the special meaning of "\" in regular expressions). It's great to be able to recall and execute the last "insert" command, for example, without needing to check the history or keep track of how many commands back it was. To re-execute the last insert command, just run ":/insert/;". If you want to be safe about it, do it in two steps to verify that you didn't accidentally recall some other command which happened to contain the string "insert", like

:/insert/

:;

(Executing the last only if you are satisfied when SqlTool reports what command it restored). Often, of course, you will want to change the command before re-executing, and that's when you combine the :s and :a commands.

We'll finish up with a couple fine points about Edit/Buffer commands. You generally can't use PL variables in Edit/Buffer commands, to eliminate possible ambiguities and complexities when modifying commands. The :w command is an exception to this rule, since it can be useful to use variables to determine the output file, and this command does not do any "editing".

The :? in-program help explains how you can change the default

regular expression matching behavior (case sensitivity, etc.), but

you can always use syntax like "(?i)" inside of your regular

expression, as described in the Java API spec for class

java.util.regex.Pattern.

History-command-matching with the /regex/ construct is

purposefully liberal, matching any portion of the command,

case sensitive, etc., but you can still use the method just

described to modify this behavior. In this case, you could

use "(?-i)" at the beginning of your regular expression to

be case-sensitive.

The SQL history shown by the :h command, and used by other commands,

is truncated to 100 entries, since its utility comes from being

able to quickly view the history list.

You can change the history length by setting the system property

sqltool.historyLength to the desire integer

value (using any of the System Property mechanisms provided by

Java).

If there is any demand, I'll make the setting of this value more

convenient.